Humans have always been more complex than any one researcher or tool could comprehensively understand, but the challenges IDEO design teams are being asked to explore are only getting gnarlier and more convoluted. We are asking questions at both ends of the scale: from huge systems with so many interconnected parts that framing them in any meaningful way becomes extraordinarily difficult, to subtly emergent or subconscious individual behaviors that can’t be uncovered by traditional large-scale survey methods.

Such is the problem with traditional processes of discovery and understanding. We need to arrive at a bird’s-eye view of the larger systems we are all tangled within, while also understanding the subtle, nuanced human behaviors that both exist within and drive the functioning of these systems. We need both the GPS and the microscope: a way to understand larger, embedded systems without sacrificing the rigor of taking the time to understand the complexities of human behavior.

Any human-centered researcher has likely experienced frustration with rigid, quantitative methods that provide the scale we need to see the whole picture, but lack the depth and nuance needed to make sense of what we’re seeing. AI is finally offering a bridge between these worlds, a technological olive branch of sorts. So, at IDEO, we’re stepping out of the false binary of qualitative vs. quantitative research, and embracing tech-powered mediums to ask new types of questions, scale our notion of context, and leverage bias intentionally in our design work.

Embracing new mediums to ask new types of questions

The questions we can ask and answer in our research depend on their medium. Qualitative methods afford qualitative questions, and quantitative methods afford quantitative questions. But the things we are designing are sufficiently complex that the questions we need answered are not simple enough for a handful of people to answer or for a superficial data survey to inform. This is why we’re turning to entirely tech-powered tools and mediums to ask (and answer) bigger, bolder, and more deeply human questions.

Framing Great Questions at Scale

In early 2024, in an attempt to craft a strategic anchor for our diverse and divergent portfolio of work, we built a tool called the “Big Questions Bot” to engage conversation around the meaty questions we were grappling with as a company. These questions–about technology’s role in the climate crisis, designing for connection, empowering young people to shape the future–were ones we knew we couldn’t frame alone, so we released our Big Questions Bot to our network of colleagues, partners, and collaborators to experiment with.

We were, of course, interested in which of our questions was most popular, and where there was most heat for IDEO to explore, but we were more interested in the conversations with, and reframes of, our questions. A traditionally designed survey would never get to this level of nuance, so we intentionally designed the Big Questions Bot to encourage organic, winding, human conversations. The Big Questions Bot opened to a landing page with a set of provocative questions to click into and explore. On the other side of each question was an engaging conversation with our carefully trained chatbot that encouraged users to refine and revise the questions to better suit their current curiosities and concerns. These conversations culminated in a personal design question and a high-level brief to begin exploring it, whether on their own or with IDEO.

This tool was designed to be equal parts community engagement and market intelligence. But more and more people beyond our immediate network started to engage with the site, and, as with any good research tool, they were using it in ways we didn’t anticipate–as a place to play with new ideas, a thought partner to explore their own big questions. The conversational nature of LLMs is what allowed us to capture the organic and human data we wanted (with consent, of course), but it was the scale of the tool that showed us what it would look like to use co-design to collectively frame design questions, not just generate solutions to questions we’d already asked. By using technology to expand our reach, we could engage a broad and diverse audience in framing rich, nuanced, and meaningful questions for design, rather than crowdsourcing solutions against questions we’d framed in isolation.

Redefining Qualitative

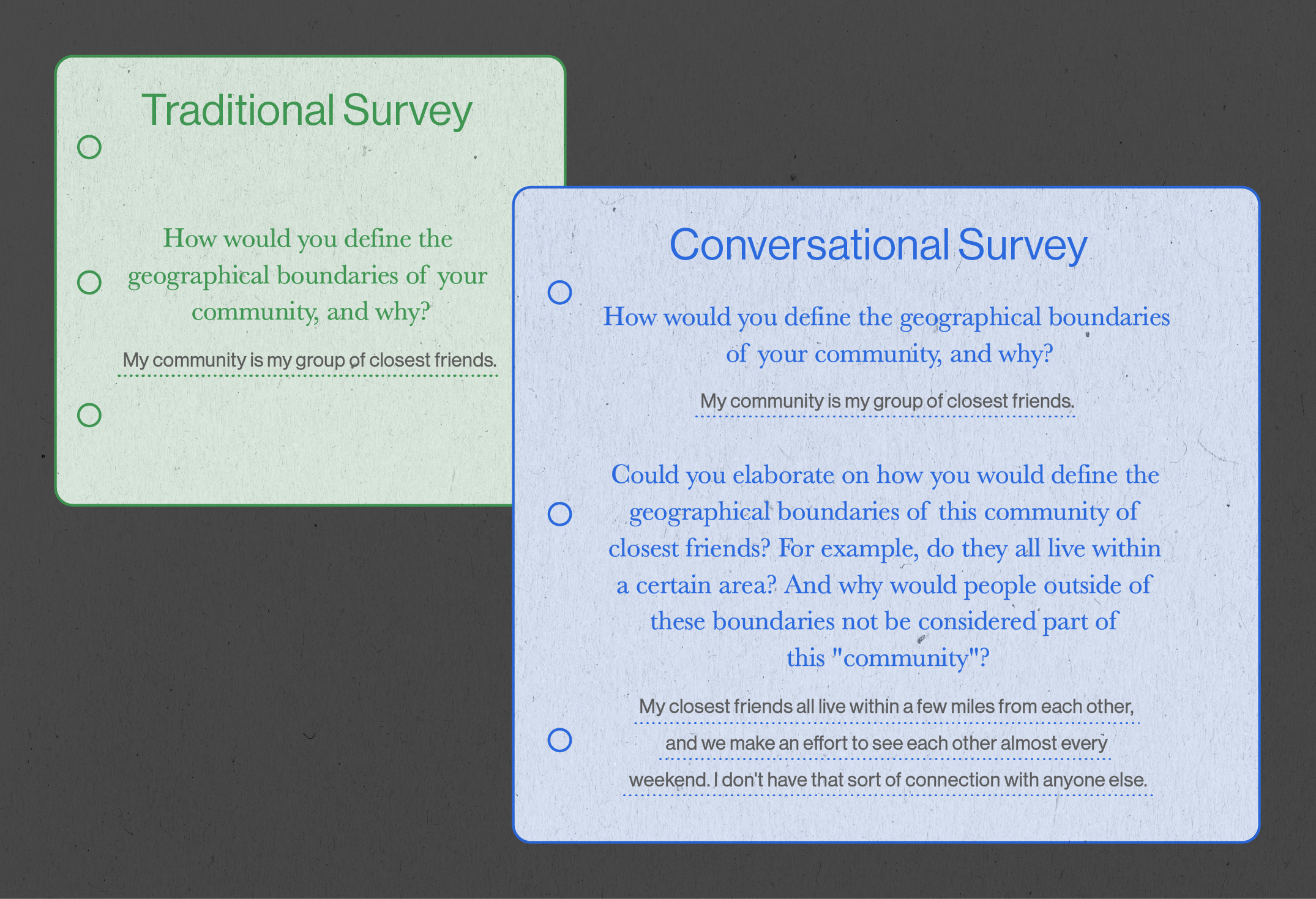

Beyond using technology to scale our approach to question framing, we’re also using it to expand our capacity to gather input for design. While qualitative interviews remain one of the most powerful tools we have for acquainting ourselves with a problem space, an individual participant can’t possibly speak to or even be aware of every subtle pattern, shift, or structure of which they are part. Traditional open-ended surveys may address parts of this problem, but they don’t allow for dynamic follow-ups, or the practiced riffs of an intuitive design researcher, which means that the resulting system-level patterns we detect aren’t always that enlightening.

To bridge this divide, we’ve been experimenting with conversational LLM-powered surveys that are engineered to pursue our research learning goals at scale. Using these tools, we can initiate the survey with a thoughtfully written list of discussion questions, allowing it to adaptively ask follow-up questions in service of our learning goals.

This approach does trade the consistency of controlled measurement for more human responses, but in many cases this is what we need to spark new ideas and encourage imaginative or speculative thinking. While these surveys can’t and shouldn’t replace face-to-face interviews, they’re proving helpful in moments when we need a streamlined approach to both discover dynamic and idiosyncratic human insight, and then generalize that insight across a larger population.

By using technologies like AI to engage in, and make sense of, more human conversations at scale, our design teams are able to ask better questions and glean more multifaceted insights that are greater than the sum of their parts. But, to direct this new, hybrid type of insight towards impact, we need better ways to understand the contexts for our design, because the contexts we’re designing for are growing increasingly messy and tangled.

This means we need to update our notion of context to better reflect people’s complex realities. In the past, getting “in context” meant meeting someone in their home or office, or shadowing them on their commute or in the grocery store: processes that put the researcher into the lives and habits of the users for whom they were designing. However, people exist in more places and in more modalities than in the past, making the process of getting “in context” more ambiguous and harder to access.

Social media feeds are context. The distributed digital worlds of a live-action video game are context. Algorithmically curated search results, Reddit groups, Spotify recommendations–this is all context. And all pretty challenging to externalize, access, and explore. But, if we want to understand people and design for their needs and behaviors, we need new ways to get into these less tangible worlds to listen, observe, and surface meaningful insight. Technology has proven quite helpful here.

Analyzing data in context

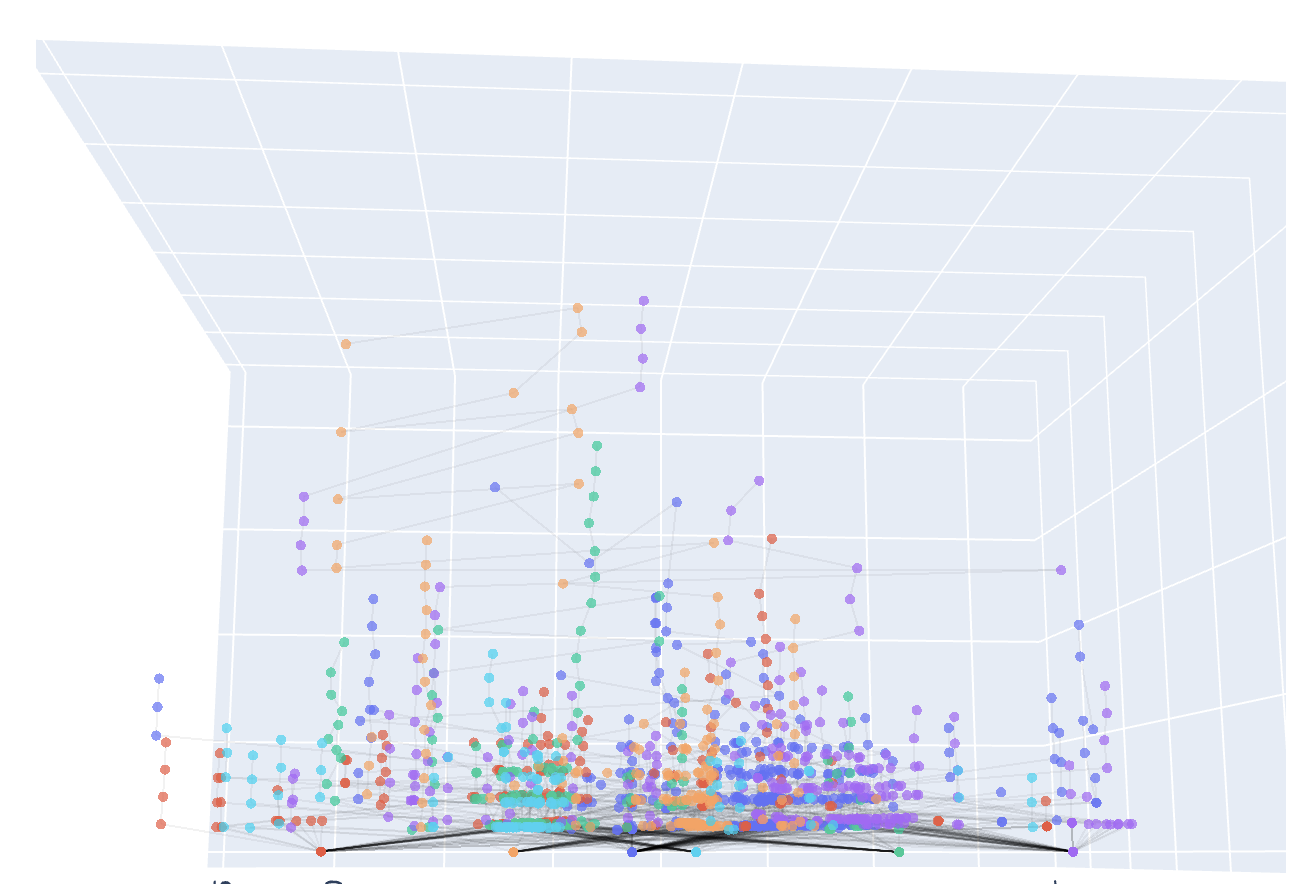

When we built our Big Questions Bot, we knew that data analysis was going to be a challenge. In order for the conversational data we captured to be actionable, we needed to be able to understand each conversation’s unique character and trajectory, as well as its position within, and relationship to, the aggregate conversation across users and across questions. We needed a way to analyze our data within its conversational context, to examine our data in an embodied and human way. To facilitate this, we created a 3D visualization of our users’ iterative conversations. This vectored space was one we could enter and inhabit. It allowed us to orient ourselves to the connections people were making between and across questions and ideas.

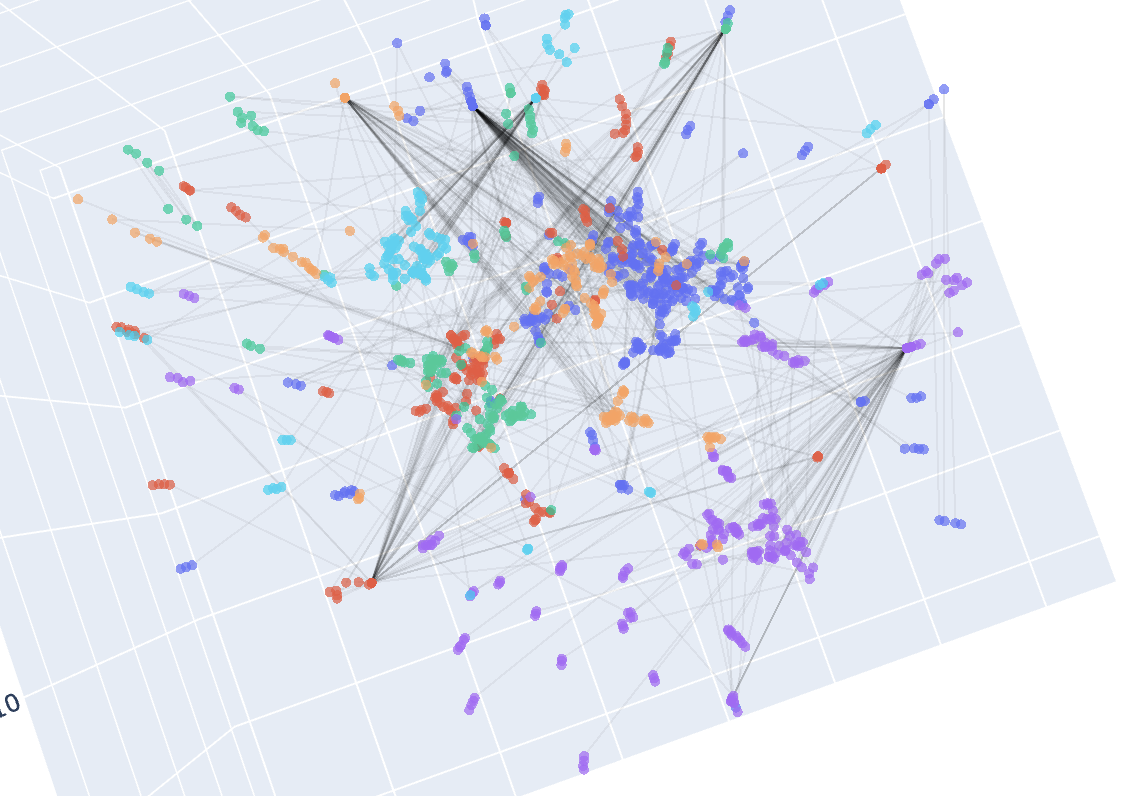

Semantically similar questions appeared closer to each other on the plot’s X-Y plane, which we called the “topical plane,” with the visualization’s third axis representing the chronology of the conversation. Together, this meant we could trace the topical evolution of users’ questions over time and see emergent clusters of hot topics. We noticed that as users conversed, questions started drifting towards the topical intersection of AI, work, and collaboration. Zooming into a cluster from there was like stepping inside the question, walking around, and mining for treasure in an embodied and intuitive way. Beyond elevating central themes, this inhabitable analysis space also allowed us to see and interact with its edges. These edges had been thoughtfully stretched and beautifully deformed by our participants’ conversations, their topical variation visually expanding beyond our initial question framing. This meaningful expansion reinforced for us how collaborative question framing can find edges beyond our line of sight, make connections so subtle they could easily be overlooked, and highlight the abundance of possibility within a question.
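For readers who want a feel for the geometry, here is a minimal sketch of how a conversation could be placed in a space like this. It is not the pipeline behind the Big Questions Bot, which the article does not specify. As stand-ins, it assumes word-count vectors instead of a real text embedding, and it defines the two topical axes as similarity to two hypothetical anchor topics instead of using a dimensionality-reduction step. The anchor words and the sample questions are invented for illustration.

```python
import math
from collections import Counter

def bag_of_words(text):
    """Lowercased word counts: a crude stand-in for a real text embedding."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a)  # Counter returns 0 for missing words
    norm = math.sqrt(sum(v * v for v in a.values())) * \
           math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Hypothetical anchor topics that define the two axes of the "topical plane".
ANCHOR_X = bag_of_words("ai work collaboration tools")
ANCHOR_Y = bag_of_words("climate energy planet future")

def trajectory(questions):
    """Map each question to (x, y, z): topical position plus turn number."""
    return [
        (cosine(bag_of_words(q), ANCHOR_X),
         cosine(bag_of_words(q), ANCHOR_Y),
         turn)
        for turn, q in enumerate(questions)
    ]

# Invented example: one user's question as it is reframed over three turns.
conversation = [
    "how might technology help the climate",
    "how might ai help climate work",
    "how might ai change work and collaboration",
]

points = trajectory(conversation)
for x, y, z in points:
    print(f"turn {z}: x={x:.2f} y={y:.2f}")
```

In this toy conversation the x value rises turn by turn while y falls to zero, which is the kind of topical drift the third axis makes visible. A real version would swap in sentence embeddings and a projection method, then hand the points to a 3D plotting tool.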

We can apply this visual approach to a variety of qualitative datasets in order to engage with individual perspectives within their collective context. For instance, we use similar visualizations to get a sense of the breadth and size of themes surfaced through the conversational qualitative surveys we mentioned earlier. Then, by creating tools that can tag the survey responses that contain a theme we’ve identified, we can uncover what demographics might be correlated with a particular theme, or what themes tend to intersect with each other, which serve as context that helps us understand each individual perspective. This quantitative approach to exploring context borrows some basic principles from statistical inference, but ultimately keeps our human judgment and intuition in the driver’s seat when it comes to interpreting which findings are strategically significant.
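The tagging-and-counting step can be sketched in a few lines. This is an illustration under loud assumptions, not our production tooling: the themes, keywords, respondent groups, and responses below are all made up, and simple keyword matching stands in for whatever tagger a team would actually use.

```python
from collections import Counter, defaultdict
from itertools import combinations

# Hypothetical themes and the keywords that signal them.
THEMES = {
    "trust": {"trust", "honest"},
    "time": {"time", "busy", "schedule"},
}

# Made-up survey responses with one demographic field.
responses = [
    {"group": "manager", "text": "I am too busy to build trust"},
    {"group": "manager", "text": "My schedule leaves no time"},
    {"group": "staff", "text": "I trust my team"},
    {"group": "staff", "text": "I want honest feedback"},
]

def tag(text):
    """Return the set of themes whose keywords appear in the text."""
    words = set(text.lower().split())
    return {theme for theme, keywords in THEMES.items() if words & keywords}

tagged = [dict(r, themes=tag(r["text"])) for r in responses]

# Which themes show up together in the same response?
co_occurrence = Counter()
for r in tagged:
    for pair in combinations(sorted(r["themes"]), 2):
        co_occurrence[pair] += 1

# How often does each theme appear within each group?
group_sizes = Counter(r["group"] for r in tagged)
theme_by_group = defaultdict(Counter)
for r in tagged:
    for theme in r["themes"]:
        theme_by_group[theme][r["group"]] += 1

def share(theme, group):
    """Fraction of a group's responses that carry the given theme."""
    return theme_by_group[theme][group] / group_sizes[group]

print(co_occurrence)
print(share("time", "manager"), share("time", "staff"))
```

Counts like these only point to where to look. Deciding whether a difference between groups is strategically meaningful remains a human call.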

Concretizing and Scaling Bias

At IDEO, we’re deeply committed to incorporating a broad range of perspectives into our design process, but equitable design doesn’t eliminate bias from the solution-making process. It can actually introduce more. But, we don’t shy away from bias. Bias is how we express a unique perspective on, or offer a novel solution to, a familiar problem. Bias is what ensures that the outcomes of our design process are markedly new and critically meaningful.

Anyone who has played around with an AI image generator has likely experienced this firsthand. AI models defer to the average, so a generic prompt with no constraints or bias often generates a pretty boring output. The more you guide and direct the AI, the more bias you thoughtfully and intentionally build into your process, the more distinctive and interesting its output becomes. Wielded in this way–thoughtfully and intentionally–bias becomes a feature of the design process, not a flaw to root out. And, it is actually the act of engaging AI in this way that necessitates the thoughtful externalization and application of bias in the design process, transforming unintended bias into a powerful medium for critically human design.

During our work with a multinational company trying to understand what motivated their diverse, globally distributed employees, we experimented with using LLMs to analyze interview transcripts from employee participants across different cultures. Beyond supporting deep analysis at scale, we found that using an LLM in this way had two primary benefits beyond our human-powered analysis control.

The first benefit was that it forced our team to clearly articulate and externalize our inherent biases and be thoughtful about which ones we wanted to apply to our analysis. To train an AI to recognize and analyze motivation, we first had to ask and answer hard questions like, “How do we define ‘motivation’ and what are the signs and signals we use to recognize it?” Of course, our definition was biased, but that bias had been externalized and examined so it could be thoughtfully and systematically applied. If we were optimizing for a consistent analysis, we could control variation by applying a single bias to the entire dataset, which wouldn’t be possible if multiple humans were splitting up the data and each evaluating a part of it. We could also play with the definition being applied, tweaking the LLM’s prompts to prototype how changes in the foundational bias might impact our analysis and thus interpretation of the data. This strategic application of bias via AI-supported data analysis means that we can be both confident that we’re thoughtfully wielding bias in our analysis, and able to quantify how changes in that bias could and did influence our meaning making.
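To show what “quantifying a change in bias” can mean in practice, here is a minimal sketch. It assumes a keyword rule as a stand-in for an LLM prompted with a written definition of motivation, and the excerpts and signal words are invented. The point is the shape of the comparison: apply one explicit definition to every excerpt, change the definition, and measure how many labels move.

```python
def make_coder(signals):
    """Build a coder that applies one explicit definition to any excerpt.

    The definition here is just a set of signal words; in a real setup it
    would be the written definition given to an LLM in its prompt.
    """
    signals = {s.lower() for s in signals}

    def code(excerpt):
        # True if the excerpt contains any signal word from the definition.
        return bool(set(excerpt.lower().split()) & signals)

    return code

# Invented interview excerpts.
excerpts = [
    "I stay late because I love the craft",
    "The bonus keeps me going",
    "I mostly follow the process",
    "I am proud when my team wins",
]

# Two hypothetical definitions of "motivation".
definition_a = make_coder({"love", "proud"})           # intrinsic signals only
definition_b = make_coder({"love", "proud", "bonus"})  # also counts rewards

labels_a = [definition_a(e) for e in excerpts]
labels_b = [definition_b(e) for e in excerpts]

# Excerpts whose label depends on which definition we chose.
changed = [e for e, a, b in zip(excerpts, labels_a, labels_b) if a != b]
shift = len(changed) / len(excerpts)

print(changed)
print(f"{shift:.0%} of excerpts change label")
```

Because each definition is applied identically to every excerpt, any difference between the two runs comes from the definition itself, not from who happened to read which transcript.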

While the scale of this experiment was small, it sparked an interesting conversation around what this type of tool could do at a bigger scale. In large-scale organizational scenarios, no one human has the capacity to evaluate the magnitude of data at hand, but involving multiple humans would inherently introduce different biases into the evaluation.

In Pursuit of Clarity

Carving clarity out of ambiguity is, and always has been, IDEO’s special sauce. In a moment where ambiguity is immense, and clarity elusive, we’re prototyping our way to a new set of tools and methods to meet the complexity of the moment. We’re experimenting with new tech-enabled research mediums that match the scale and complexity of the questions we’re being asked to answer, exploring the subtle and subconscious parts of a person’s context, and using AI to wield our biases more meaningfully in human design decisions.

Some deeply human things can only be understood at scale, but scaled research methods aren’t always very human. So, we’re rejecting the false binary of qualitative and quantitative research, embracing new tech-powered mediums to ask new types of questions, scale our notion of context, and leverage bias intentionally in our design work.
