At IDEO I work on a lot of physical product designs that incorporate displays. I commonly work with UX designers whose tool of choice for rapid iteration of experiences is Figma.

I was recently working on a project that was a kind of kiosk: a touch screen with external hardware elements. We already had a phenomenal UI prototype built in Figma, and I had built out some of the digital hardware elements with Arduino to create an interactive model, or "experience prototype."

I wanted to link the external LED animations to the screen flow, so that the LED behaviors would be choreographed to each moment in the user flow. I was floored when I realized this wasn't possible with the existing tools. ProtoPie did not import the Figma screens properly, and the fantastic software Blokdots (co-created by ex-IDEO'er Olivier Brückner) only lets hardware talk to Figma's "design view," whereas I needed to communicate with the "prototype view."

I've got a bit of a soft spot for making hardware prototyping tools, so honestly I was a bit excited that no one had figured this one out yet. After digging into the Figma API, I realized why Blokdots hadn't done it: the API doesn't support any communication to or from the prototype view. I had to find a workaround, which was even more exciting!

After a few false starts confirmed that the API couldn't be used, I had a realization: there is one way a Figma prototype can talk to something outside its session. It can open links, and links can carry information. Talking in the other direction, from Arduino to Figma, is easy, because Figma prototypes can listen for key events.
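To make the link idea concrete, here is a minimal Python sketch of the outbound half, under a made-up convention: each Figma hotspot uses an "Open link" interaction pointing at a URL such as `http://figma-bridge.local/event?name=welcome`, and whatever receives that link turns it into a one-line command for the Arduino. The host name `figma-bridge.local`, the `name` parameter, and the `EVT` serial framing are all hypothetical choices for illustration, not anything Figma or Arduino defines.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Hypothetical bridge-link convention (an assumption, not a Figma feature):
#   http://figma-bridge.local/event?name=<event>
BRIDGE_HOST = "figma-bridge.local"


def parse_event(url: str) -> Optional[str]:
    """Return the event name carried by a bridge link, or None for any other URL."""
    parts = urlparse(url)
    if parts.hostname != BRIDGE_HOST or parts.path != "/event":
        return None  # an ordinary link, not meant for the hardware
    names = parse_qs(parts.query).get("name")
    return names[0] if names else None


def to_serial_line(event: str) -> bytes:
    """Encode an event as one newline-terminated ASCII line for the Arduino.

    The "EVT <name>" framing is a hypothetical protocol; an Arduino sketch
    could read it with Serial.readStringUntil('\\n') and switch on the name.
    """
    return f"EVT {event}\n".encode("ascii")


if __name__ == "__main__":
    event = parse_event("http://figma-bridge.local/event?name=welcome")
    print(event)                  # welcome
    print(to_serial_line(event))  # b'EVT welcome\n'
```

A line-oriented text protocol is just one easy choice here: it is trivial to debug in the Arduino Serial Monitor, and new LED behaviors only need a new event name.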

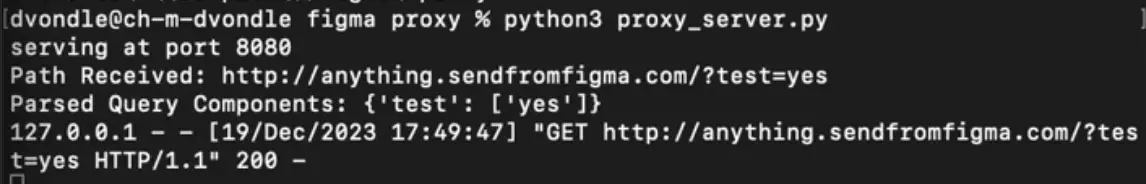

On the advice of IDEO's Jenna Fizel, I built a scrappy prototype: I set Firefox as my default browser and used its proxy settings to route requests to a simple Python proxy server I wrote.
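The proxy trick can be sketched with nothing but Python's standard library. This is not the original server, just a minimal illustration assuming Firefox's manual proxy setting points its HTTP proxy at `127.0.0.1:8888` and that bridge links use plain `http://` (HTTPS links would arrive as opaque `CONNECT` tunnels, hiding the URL). The `figma-bridge.local` link format is hypothetical, and appending to a list stands in for writing to the Arduino.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlparse

# Hypothetical bridge-link format (an assumption for illustration):
#   http://figma-bridge.local/event?name=<event>
BRIDGE_HOST = "figma-bridge.local"
received_events = []  # stand-in for "send this event to the Arduino"


class BridgeProxyHandler(BaseHTTPRequestHandler):
    """Catches plain-HTTP requests that the browser routes through its proxy.

    Requests sent to an HTTP proxy carry the absolute URL in the request line,
    e.g. "GET http://figma-bridge.local/event?name=welcome HTTP/1.1", so
    self.path holds the full URL rather than just "/event?...".
    """

    def do_GET(self) -> None:
        parts = urlparse(self.path)
        if parts.hostname == BRIDGE_HOST and parts.path == "/event":
            name = parse_qs(parts.query).get("name", [""])[0]
            if name:
                received_events.append(name)  # a real bridge would write to serial here
            status, body = 200, b"ok"
        else:
            # This sketch does not forward other traffic, so normal browsing fails.
            status, body = 404, b"not a bridge link"
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args) -> None:
        pass  # keep the console quiet


def start_proxy(port: int = 8888) -> HTTPServer:
    """Serve on localhost in a background thread and return the server."""
    server = HTTPServer(("127.0.0.1", port), BridgeProxyHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Because the browser still treats each link as a page to display, every event lands in a tab, which is exactly the limitation described next.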

This showed that the approach could work, but each event I sent opened a new tab in Firefox. I needed to make a native app.

But I had never written a Swift app, or any native macOS app, in my entire life. ChatGPT was an incredible teacher and assistant as I built a native macOS app from the ground up.

I started by asking ChatGPT to help me set up my Xcode project properly, and it was awesome at that. Here's an example of some of the basic conversations I had to make progress on the app:

I was honestly over the moon at how well I could get help learning what I needed in the moment. Some problems would have taken days of study to resolve; now I could resolve them in seconds.
